Terraform

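Back up the state before removing resources from it:

terraform state pull > backup.tfstate

Remove all top-level kubernetes_ resources from the state:

terraform state list | grep '^kubernetes_' | xargs -n1 terraform state rm

If something goes wrong, you can restore the state with terraform state push backup.tfstate.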
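The anchored grep pattern above only selects addresses that start with kubernetes_, so resources inside modules are left alone. A minimal sketch of that selection, assuming a made-up list of state addresses (the sample addresses and the function name select_addresses are hypothetical, not taken from a real state):

```python
import re


def select_addresses(addresses, pattern=r"^kubernetes_"):
    """Return the state addresses matching the regex, as grep would."""
    regex = re.compile(pattern)
    return [addr for addr in addresses if regex.search(addr)]


# Hypothetical output of `terraform state list`
sample = [
    "kubernetes_namespace.monitoring",
    "kubernetes_secret.grafana",
    "helm_release.grafana",
    "module.apps.kubernetes_deployment.api",
]

selected = select_addresses(sample)

# Print the commands that xargs would run, one per selected address
for addr in selected:
    print(f"terraform state rm '{addr}'")
```

The module address is skipped because the pattern is anchored at the start of the line; dropping the ^ would also match resources nested in modules.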

First, create a service principal so that a CI/CD pipeline can log in automatically:

az login

az account set --subscription "your-subscription-id"

az ad sp create-for-rbac --name "<your-sp-name>" --role="Contributor" --scopes="/subscriptions/<your-subscription-id>"

A service principal can also be created for a single resource group:

az ad sp create-for-rbac --name "sp-pipeline" --role="Contributor" --scopes="/subscriptions/xxxx-xxxx-44f0-8fbe-xxxxx/resourceGroups/pg_xxxxx-171"

The output looks like this:

{
  "appId": "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx",
  "displayName": "<your-sp-name>",
  "password": "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx",
  "tenant": "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx"
}

These credentials can be set as environment variables for Terraform (appId as ARM_CLIENT_ID, password as ARM_CLIENT_SECRET, tenant as ARM_TENANT_ID):

export ARM_CLIENT_ID="xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx"

export ARM_CLIENT_SECRET="xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx"

export ARM_SUBSCRIPTION_ID="xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx"

export ARM_TENANT_ID="xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx"

In a GitHub Actions workflow it could look like this:

jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout code
        uses: actions/checkout@v2
      - name: Set up Terraform
        uses: hashicorp/setup-terraform@v1
      - name: Azure Login
        env:
          ARM_CLIENT_ID: ${{ secrets.ARM_CLIENT_ID }}
          ARM_CLIENT_SECRET: ${{ secrets.ARM_CLIENT_SECRET }}
          ARM_SUBSCRIPTION_ID: ${{ secrets.ARM_SUBSCRIPTION_ID }}
          ARM_TENANT_ID: ${{ secrets.ARM_TENANT_ID }}
        run: terraform init

Create the Terraform main.tf (Terraform still runs locally at this point).

Then run terraform apply to deploy the VM:

# Provider configuration
provider "azurerm" {
  features {}
}

# Use the existing resource group
data "azurerm_resource_group" "existing_rg" {
  name = "rg_xxxxxxxx-171" # your existing resource group
}

# Virtual network
resource "azurerm_virtual_network" "vnet" {
  name = "myVnet"
  address_space = ["10.0.0.0/16"]
  location = data.azurerm_resource_group.existing_rg.location
  resource_group_name = data.azurerm_resource_group.existing_rg.name
}

# Subnet
resource "azurerm_subnet" "subnet" {
  name = "mySubnet"
  resource_group_name = data.azurerm_resource_group.existing_rg.name
  virtual_network_name = azurerm_virtual_network.vnet.name
  address_prefixes = ["10.0.1.0/24"]
}

# Network interface
resource "azurerm_network_interface" "nic" {
  name = "myNic"
  location = data.azurerm_resource_group.existing_rg.location
  resource_group_name = data.azurerm_resource_group.existing_rg.name

  ip_configuration {
    name = "internal"
    subnet_id = azurerm_subnet.subnet.id
    private_ip_address_allocation = "Dynamic"
  }
}

# Virtual machine
resource "azurerm_linux_virtual_machine" "vm" {
  name = "myVM"
  resource_group_name = data.azurerm_resource_group.existing_rg.name
  location = data.azurerm_resource_group.existing_rg.location
  size = "Standard_B1s"
  admin_username = "azureuser"
  admin_password = "Password4321"
  # Required when admin_password is used; the provider default is true
  disable_password_authentication = false
  network_interface_ids = [azurerm_network_interface.nic.id]

  os_disk {
    caching = "ReadWrite"
    storage_account_type = "Standard_LRS"
  }

  source_image_reference {
    publisher = "Canonical"
    offer = "UbuntuServer"
    sku = "18.04-LTS"
    version = "latest"
  }
}

# Public IP address
resource "azurerm_public_ip" "public_ip" {
  name = "myPublicIP"
  location = data.azurerm_resource_group.existing_rg.location
  resource_group_name = data.azurerm_resource_group.existing_rg.name
  allocation_method = "Dynamic"
}

# Network security group for the VM
resource "azurerm_network_security_group" "nsg" {
  name = "myNSG"
  location = data.azurerm_resource_group.existing_rg.location
  resource_group_name = data.azurerm_resource_group.existing_rg.name

  security_rule {
    name = "SSH"
    priority = 1001
    direction = "Inbound"
    access = "Allow"
    protocol = "Tcp"
    source_port_range = "*"
    destination_port_range = "22"
    source_address_prefix = "*"
    destination_address_prefix = "*"
  }
}

# Associate the network interface with the security group
resource "azurerm_network_interface_security_group_association" "nic_nsg" {
  network_interface_id = azurerm_network_interface.nic.id
  network_security_group_id = azurerm_network_security_group.nsg.id
}

For Terraform to deploy from the pipeline as well, the tfstate must be available there; in other words, the pipeline needs access to a shared backend that holds the Terraform state.

To do this, create a storage account in Azure where the state file can be stored. This can also be done with Terraform: extend main.tf with the storage resources and run terraform apply again:

# Create the storage account
resource "azurerm_storage_account" "storage" {
  name = "tfstatedemovm"
  location = data.azurerm_resource_group.existing_rg.location
  resource_group_name = data.azurerm_resource_group.existing_rg.name
  account_tier = "Standard"
  account_replication_type = "LRS"
}

# Create the storage container
resource "azurerm_storage_container" "container" {
  name = "tfstate"
  storage_account_name = azurerm_storage_account.storage.name
  container_access_type = "private"
}

output "storage_account_name" {
  value = azurerm_storage_account.storage.name
}

output "container_name" {
  value = azurerm_storage_container.container.name
}

Now you can switch to the Azure Storage backend by adding the following block to main.tf:

terraform {
  backend "azurerm" {
    resource_group_name = "rg-xxxxxxx-171"
    storage_account_name = "tfstatedemovm"
    container_name = "tfstate"
    key = "terraform.tfstate"
  }
}

Running terraform init now detects that the backend configuration has changed and suggests migrating the Terraform state to the Azure backend with terraform init -migrate-state:

$ terraform init

Initializing the backend...

╷

│ Error: Backend configuration changed

│

│ A change in the backend configuration has been detected, which may require migrating existing state.

│

│ If you wish to attempt automatic migration of the state, use "terraform init -migrate-state".

│ If you wish to store the current configuration with no changes to the state, use "terraform init -reconfigure".

╵

$ terraform init -migrate-state

Initializing the backend...

Terraform detected that the backend type changed from "local" to "azurerm".

Do you want to copy existing state to the new backend?

Pre-existing state was found while migrating the previous "local" backend to the

newly configured "azurerm" backend. An existing non-empty state already exists in

the new backend. The two states have been saved to temporary files that will be

removed after responding to this query.

Previous (type "local"): /tmp/terraform3111667706/1-local.tfstate

New (type "azurerm"): /tmp/terraform3111667706/2-azurerm.tfstate

Do you want to overwrite the state in the new backend with the previous state?

Enter "yes" to copy and "no" to start with the existing state in the newly

configured "azurerm" backend.

Enter a value: yes

Successfully configured the backend "azurerm"! Terraform will automatically

use this backend unless the backend configuration changes.

Initializing provider plugins...

- Reusing previous version of hashicorp/azurerm from the dependency lock file

- Using previously-installed hashicorp/azurerm v4.1.0

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see

any changes that are required for your infrastructure. All Terraform commands

should now work.

If you ever set or change modules or backend configuration for Terraform,

rerun this command to reinitialize your working directory. If you forget, other

commands will detect it and remind you to do so if necessary.

Next, a service connection has to be created.

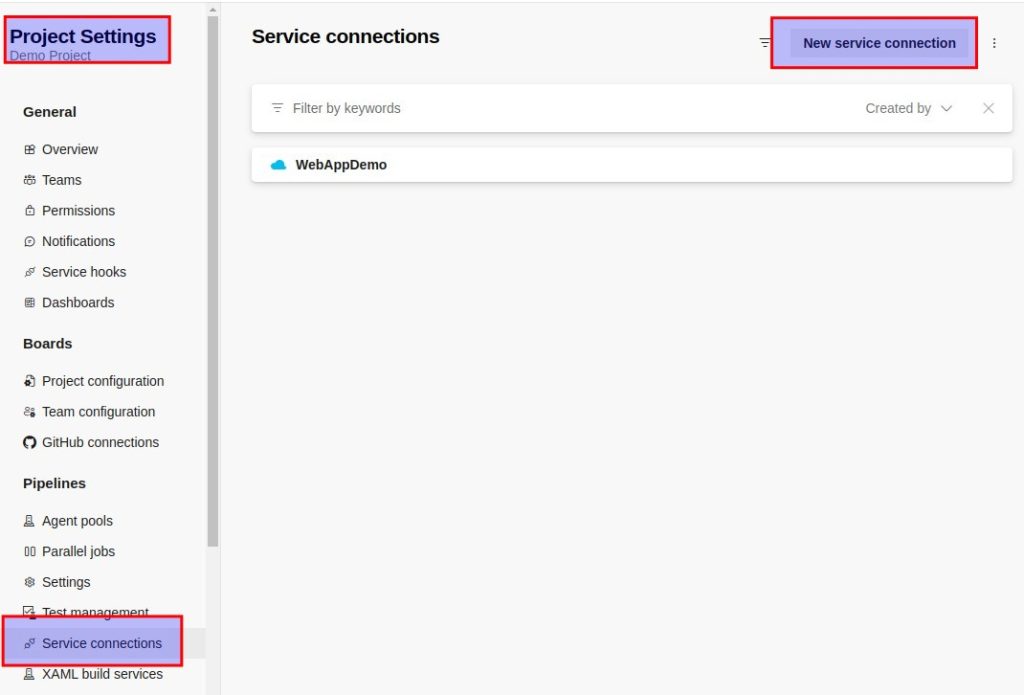

In the Project Settings, click Service Connection.

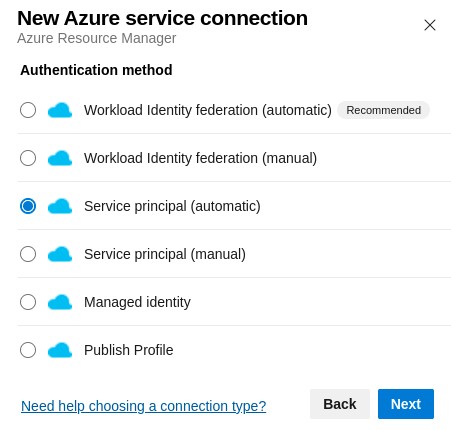

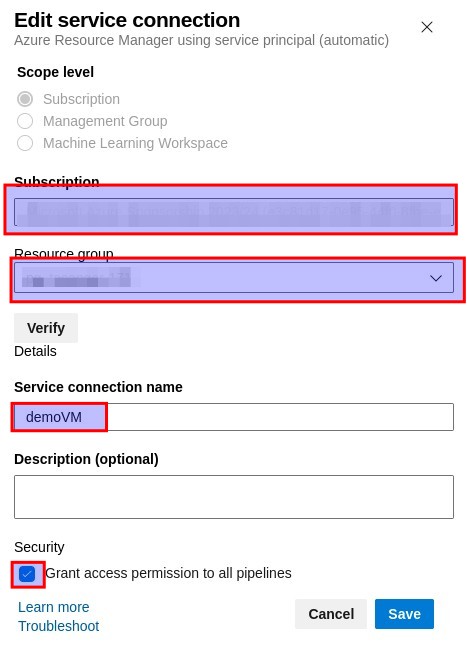

Click "Azure Resource Manager" and then "Service principal (automatic)".

Create an azure-pipelines.yml.

The azureSubscription is the name of the service connection that was just created (demoVM):

trigger:
  - main # or your branch name

pool:
  vmImage: 'ubuntu-latest'

# servicePrincipalId, servicePrincipalKey, subscriptionId and tenantId
# are expected to be defined as pipeline variables
variables:
  ARM_CLIENT_ID: $(servicePrincipalId)
  ARM_CLIENT_SECRET: $(servicePrincipalKey)
  ARM_SUBSCRIPTION_ID: $(subscriptionId)
  ARM_TENANT_ID: $(tenantId)

steps:
  - checkout: self
  - task: AzureCLI@2
    inputs:
      azureSubscription: 'demoVM' # name of the Azure service connection in Azure DevOps
      scriptType: 'bash'
      scriptLocation: 'inlineScript'
      inlineScript: |
        # Install Terraform
        curl -o- https://raw.githubusercontent.com/tfutils/tfenv/master/install.sh | bash
        export PATH="$HOME/.tfenv/bin:$PATH"
        tfenv install latest
        tfenv use latest

        # Run the Terraform commands
        terraform init
        terraform plan -out=tfplan
        terraform apply -auto-approve tfplan
      workingDirectory: '$(System.DefaultWorkingDirectory)/terraform'

Encrypt a file with KMS:

DEK=$(aws kms generate-data-key --key-id ${KMS_KEY_ID} --key-spec AES_128)

DEK_PLAIN=$(echo $DEK | jq -r '.Plaintext' | base64 -d | xxd -p)

DEK_ENC=$(echo $DEK | jq -r '.CiphertextBlob')

# This key must be stored alongside the encrypted artifacts, without it we won't be able to decrypt them

base64 -d <<< $DEK_ENC > key.enc

openssl enc -aes-128-cbc -e -in ${TARGET}.zip -out ${TARGET}.zip.enc -K ${DEK_PLAIN:0:32} -iv 0

hex string is too short, padding with zero bytes to length

task: [artifacts:encrypt] rm -rf ${TARGET}.zip

Decrypt a file with KMS:

DEK=$(aws kms decrypt --ciphertext-blob fileb://key.enc --output text --query Plaintext | base64 -d | xxd -p)

openssl enc -aes-128-cbc -d -K ${DEK:0:32} -iv 0 -in ${TARGET}.zip.enc -out ${TARGET}.zip

eventbridge.tf:

data "aws_subnet" "subnet_id_A" {
  filter {
    name = "tag:aws:cloudformation:logical-id"
    values = ["PrivateSubnetA"]
  }
}

data "aws_subnet" "subnet_id_B" {
  filter {
    name = "tag:aws:cloudformation:logical-id"
    values = ["PrivateSubnetB"]
  }
}

data "aws_subnet" "subnet_id_C" {
  filter {
    name = "tag:aws:cloudformation:logical-id"
    values = ["PrivateSubnetC"]
  }
}

### Lambda Function

## Create Lambda Role
resource "aws_iam_role" "announcement-nodegroup-rollout" {
  name = "announcement-nodegroup-rollout"

  assume_role_policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": "sts:AssumeRole",
      "Principal": {
        "Service": "lambda.amazonaws.com"
      },
      "Effect": "Allow",
      "Sid": ""
    }
  ]
}
EOF
}

##

## Create LogGroup
resource "aws_cloudwatch_log_group" "announcement-nodegroup-rollout" {
  name = "/aws/lambda/announcement-nodegroup-rollout"
  retention_in_days = 7
}

resource "aws_iam_policy" "announcement-nodegroup-rollout" {
  name = "announcement-nodegroup-rollout"
  path = "/"
  description = "IAM policy for logging from a lambda"

  policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": [
        "logs:CreateLogGroup",
        "logs:CreateLogStream",
        "logs:PutLogEvents"
      ],
      "Resource": "arn:aws:logs:*:*:*",
      "Effect": "Allow"
    },
    {
      "Effect": "Allow",
      "Action": [
        "ec2:CreateNetworkInterface",
        "ec2:DeleteNetworkInterface",
        "ec2:DescribeNetworkInterfaces"
      ],
      "Resource": "*"
    }
  ]
}
EOF
}

resource "aws_iam_role_policy_attachment" "announcement-nodegroup-rollout" {
  role = aws_iam_role.announcement-nodegroup-rollout.name
  policy_arn = aws_iam_policy.announcement-nodegroup-rollout.arn
}

##

resource "aws_lambda_function" "announcement-nodegroup-rollout" {
  description = "Send Nodegroup Rollout Announcement to Teams"
  filename = data.archive_file.lambda_zip.output_path
  source_code_hash = data.archive_file.lambda_zip.output_base64sha256
  function_name = "announcement-nodegroup-rollout"
  role = aws_iam_role.announcement-nodegroup-rollout.arn
  timeout = 180
  runtime = "python3.12"
  handler = "lambda_function.lambda_handler"
  memory_size = 256

  vpc_config {
    subnet_ids = [data.aws_subnet.subnet_id_A.id, data.aws_subnet.subnet_id_B.id, data.aws_subnet.subnet_id_C.id]
    security_group_ids = lookup(var.security_group_ids, var.stage)
  }

  environment {
    variables = {
      stage = var.stage
      webhook_url = var.webhook_url
    }
  }

  tags = {
    Function = "announcement-nodegroup-rollout"
    Customer = "os/tvnow/systems"
    CustomerProject = "r5s-announcements"
  }
}

data "archive_file" "lambda_zip" {
  type = "zip"
  source_dir = "${path.module}/lambda-nodegroup-rollout-source/package"
  output_path = "${path.module}/lambda-nodegroup-rollout-source/package/lambda_function.zip"
}

###

resource "aws_lambda_permission" "announcement-nodegroup-rollout" {
  statement_id = "AllowExecutionFromEventBridge"
  action = "lambda:InvokeFunction"
  function_name = aws_lambda_function.announcement-nodegroup-rollout.function_name
  principal = "events.amazonaws.com"
  source_arn = module.eventbridge.eventbridge_rule_arns["logs"]
}

module "eventbridge" {
  source = "terraform-aws-modules/eventbridge/aws"
  version = "v2.3.0"

  create_bus = false

  rules = {
    logs = {
      name = "announcement-UpdateNodegroupVersion"
      description = "announcement-UpdateNodegroupVersion"
      event_pattern = jsonencode({ "detail" : { "eventName" : ["UpdateNodegroupVersion"] } })
    }
  }

  targets = {
    logs = [
      {
        name = "announcement-UpdateNodegroupVersion"
        arn = aws_lambda_function.announcement-nodegroup-rollout.arn
      }
    ]
  }
}

lambda_function.py:

import json
import os

import urllib3


class TeamsWebhookException(Exception):
    """Custom exception for a failed webhook call."""


class ConnectorCard:
    def __init__(self, hookurl, http_timeout=60):
        self.http = urllib3.PoolManager()
        self.payload = {}
        self.hookurl = hookurl
        self.http_timeout = http_timeout

    def text(self, mtext):
        self.payload["text"] = mtext
        return self

    def send(self):
        headers = {"Content-Type": "application/json"}
        r = self.http.request(
            "POST",
            self.hookurl,
            body=json.dumps(self.payload).encode("utf-8"),
            headers=headers,
            timeout=self.http_timeout,
        )
        if r.status == 200:
            return True
        raise TeamsWebhookException(r.reason)


def lambda_handler(event, context):
    nodegroup_name = event["detail"]["requestParameters"]["nodegroupName"]
    print(nodegroup_name)

    # Only rollouts of the default node group are announced
    if "r5s-default" not in nodegroup_name:
        return {
            "statusCode": 200,
            "body": json.dumps("Announcing only default Node Group"),
        }

    stage = os.environ["stage"]
    webhook_url = os.environ["webhook_url"]

    # Fill the stage into the message template shipped with the package
    with open("announcement.tpl", "r") as tpl:
        message = tpl.read().replace("###STAGE###", stage)

    myTeamsMessage = ConnectorCard(webhook_url)
    myTeamsMessage.text(message)
    myTeamsMessage.send()

announcement.tpl:

<h1><b>r5s node rollout started</b></h1>

A node rollout has just been started on r5s ###STAGE### (triggered by updates, config changes, ...).<br />

Environment variables:

stage = dev|preprod|prod

webhook_url = <webhook url>
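The handler's two decisions (which node groups are announced, and how the stage ends up in the message) can be checked locally without calling the webhook. A minimal sketch, assuming heavily trimmed sample events and a shortened template; the helper names should_announce and render, the node group names, and the template text are hypothetical:

```python
def should_announce(event, marker="r5s-default"):
    """Mirror the handler's filter: only node groups containing the marker."""
    name = event["detail"]["requestParameters"]["nodegroupName"]
    return marker in name


def render(template, stage):
    """Mirror the handler's template step: replace the ###STAGE### placeholder."""
    return template.replace("###STAGE###", stage)


# Hypothetical, trimmed UpdateNodegroupVersion events
default_event = {
    "detail": {
        "eventName": "UpdateNodegroupVersion",
        "requestParameters": {"nodegroupName": "r5s-default-a"},
    }
}
other_event = {
    "detail": {
        "eventName": "UpdateNodegroupVersion",
        "requestParameters": {"nodegroupName": "r5s-gpu-a"},
    }
}

# Shortened stand-in for announcement.tpl
template = "A node rollout has just been started on r5s ###STAGE###."

for event in (default_event, other_event):
    if should_announce(event):
        print(render(template, "dev"))
    else:
        print("skipped")
```

Keeping the filter and the rendering in small pure functions like these makes them easy to test; the real handler additionally reads stage and webhook_url from the environment and posts the result to Teams.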

To enable Terraform logging:

export TF_LOG=DEBUG

export TF_LOG=TRACE

To disable it:

unset TF_LOG

terraform state list

terraform state list | grep thomas

data.gitlab_user.members["thomas@asanger.dev"]

data.ldap_user.members["thomas@asanger.dev"]

module.upn_lookup["thomas@asanger.dev"].data.http.upn_lookup

terraform state rm 'data.ldap_user.members["thomas@asanger.dev"]'

terraform state show 'data.gitlab_user.members["thomas@asanger.dev"]'

# data.gitlab_user.members["thomas@asanger.dev"]:

data "gitlab_user" "members" {

avatar_url = "https://gitlab.asanger.dev/505/avatar.png"

can_create_group = false

can_create_project = false

color_scheme_id = 0

external = false

id = "505"

is_admin = false

name = "Asanger, Thomas"

namespace_id = 0

projects_limit = 0

state = "active"

theme_id = 0

two_factor_enabled = false

user_id = 505

username = "asangert"

}

terraform state show 'gitlab_group_membership.maintainers["thomas@asanger.dev"]'

# gitlab_group_membership.maintainers["thomas@asanger.dev"]:

resource "gitlab_group_membership" "maintainers" {

access_level = "maintainer"

group_id = "6911"

id = "6911:505"

skip_subresources_on_destroy = false

unassign_issuables_on_destroy = false

user_id = 505

}

terraform import -var-file ../../compiled/mp_content/team.tfvars 'gitlab_group_membership.maintainers["thomas@asanger.dev"]' "6911:505"

echo 'password' | kapitan refs --write awskms:tokens/grafana_ng_admin_password --key alias/r5s-kapitan -f -

kapitan refs --reveal --ref-file deploy/refs/tokens/grafana_ng_admin_password